Uptake matters: Why evidence and communications go hand in hand

For evidence to be useful, it must be used.

This statement is hardly controversial, and yet, it is surprisingly common for state-of-the-art research to waste away in online archives.

Case in point: In 2014, the World Bank used download and citation data to study which of its reports were widely read. Although the Bank spent about a quarter of its budget for country services on knowledge products, only 13% of reports were downloaded more than 250 times. Alarmingly, more than 31% of policy reports had never been downloaded, and almost 87% had never been cited. Ironically, this particular working paper received widespread interest. A graph of data from the paper was published online with the distressing title “Nobody is reading your pdf,” enough to make anybody with “researcher” in their job title shudder.

Fortunately, the numbers at the IEU are different. Of the evaluation reports published in 2021, all have been downloaded more than 250 times, and one was downloaded as many as 800 times. Older reports also continue to be downloaded often: the final report of the landmark Forward-Looking Performance Review, the first performance review of the GCF by the IEU, was downloaded 350 times in 2021, despite being first published in 2019. The IEU’s learning papers and working papers are generally downloaded at least 100 times within the first year of publication, and even shorter documents like the four-page GEvalBriefs for the recent SIDS and Adaptation evaluations have been downloaded over 100 times.

This has not come effortlessly. The IEU’s uptake, communications and partnerships work exists to make sure that the IEU’s evaluations, capacity building and learning work are compellingly presented and disseminated to the right audiences. We take pride in pursuing the most effective avenues to get evidence in front of those who can use it in decision-making.

Most public organizations whose purpose centres on knowledge production have a mandate that includes informing the public. The IEU is no exception: outreach features prominently in our guiding document. The IEU exists to inform decision-making in the GCF Board and to identify and disseminate lessons learned. We do this mainly through our evaluations, but also through our explicit mandate to synthesize the findings and lessons learned from our evaluations to inform not only the GCF Board, but also the GCF Secretariat, National Designated Authorities (NDAs), implementing entities, observer organizations and others. The IEU is also asked to maintain close relationships with peer evaluation offices and other relevant stakeholders.

Communicating evaluations

The task given to the IEU by the GCF Board identifies receivers of knowledge (the audiences above) and a sender (the IEU). For communication to take place, knowledge needs to be transmitted actively and effectively. That means that, even setting aside the relationship-building aspect, a good part of the IEU mandate hinges on good communications work.

At the IEU, our efforts to ensure uptake include:

- Multimedia content. While the final evaluation reports contain the most comprehensive information about the evaluation’s methods, findings and recommendations, they also take hours to read from cover to cover. To give the evidence the best chance of being absorbed by decision-makers, the key messages need to be selected and made accessible. Multimedia content plays a major role in helping us accomplish that task, both in the form of videos and our ongoing podcast series ‘The Evaluator’. By creating multi-platform content related to our key activities, we make it possible for audiences to encounter our most important messages through video, audio, text and events.

- A strong online presence. The website functions as a repository of resources and hosts all our key documents: our workplans, newsletters, evaluation knowledge products, learning and working papers, blogs, press releases, event recordings, podcast episodes and ‘Spotlight’ videos are all available there.

- Hosting and participating in events. Events allow us to start or contribute to conversations between our evaluation experts and stakeholders inside and outside the GCF. In the context of evaluations, events help us learn from peers and raise awareness of our findings. To that end, we place high value on participating in events like the 2021 Asian Evaluation Week, the Global Development Conference and, not least, COP26, where we hosted and spoke at a record 13 events at the Conference venue in Glasgow.

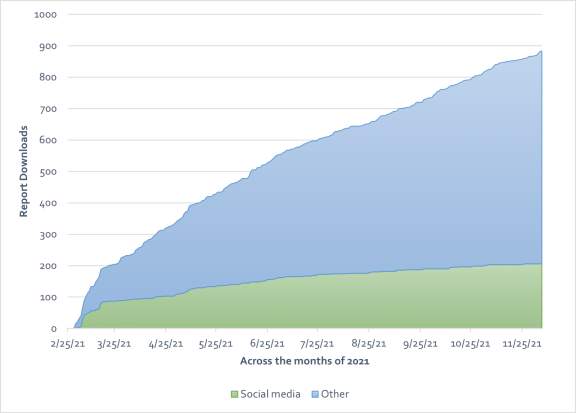

While it’s difficult to measure the results of some of these efforts, others can be quantified easily. Analytics data from our website show that 34% of evaluation report readers access the documents through links on social media, newsletters or partner websites. This tells us that our outreach efforts to direct readers to the reports are paying off.

Figure 1 – Number of downloads of the #Adapt2020 evaluation report across 2021 by source: social media vs. others.

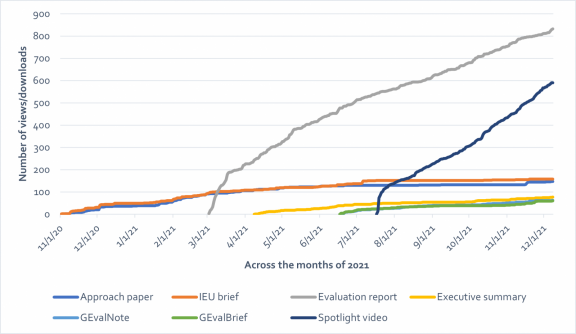

We also know that our ‘Spotlight’ video series is quite popular on YouTube. Our ‘Spotlight’ videos are five to seven minutes long and include interviews with the team behind each evaluation. Our aim with them is to give our audience a different and more approachable way of digesting the key findings and recommendations of our evaluations. In most cases, the Spotlight videos reach almost as large an audience as the final evaluation reports, and sometimes an even larger one.

Figure 2 – Cumulative number of views/downloads of #Adapt2020 evaluation products across 2021.

Source: Analytics data from the IEU microsite and YouTube.

Evaluating communications

But of course, not every creative communications idea works out, and we regularly evaluate our own communications and uptake efforts as well. Earlier this year, we undertook a review of the IEU microsite (ieu.greenclimate.fund). In true evaluation spirit, we used data from multiple sources to learn more about what works and what doesn’t. We sought individual feedback from our own team, conducted live user testing with users outside of the IEU and dove deeper into quantitative analytics data on views and downloads. Analyzing that data, we identified a number of areas where we can do better, and we are currently in the midst of implementing some of our ideas for site improvements.

In that way, we not only communicate our evaluations, but we also evaluate our communications. We take our dissemination task seriously because it is vital to delivering on our mandate. The usefulness of evidence is predicated on its use, and so we continue to do everything we can to create the best possible conditions for our evidence to be used.

What can we do better to make our findings accessible? If you have ideas on how we can make our knowledge products more useful, please get in touch with our Communications Officer, Yeonji Kim.

Disclaimer: The views expressed in blogs are the author's own and do not necessarily reflect the views of the Independent Evaluation Unit of the Green Climate Fund.