Designing an impact evaluation in six steps

How do we measure the impact of a project? For instance, how do we know if a COVID-19 vaccine is effective? The answer is through impact evaluation!

Impact evaluation generates vital information on whether an intervention truly works by measuring the change that can be attributed solely to the project. This blog introduces the six steps for embedding an impact evaluation within a climate project.

I have been deeply involved in impact assessment, supporting the Independent Evaluation Unit’s Learning-Oriented Real-Time Impact Assessment (LORTA) programme, which provides technical assistance embedded in the implementation, monitoring, and evaluation activities of GCF projects. In 2020, I had the privilege of supporting the first LORTA virtual design workshop, which ran over nine weeks with more than 80 participants. The six steps outlined here summarize that workshop.

The author attends and supports the IEU’s first LORTA virtual design workshop, held in 2020.

1. Theory of Change

The first step in designing an impact evaluation is to develop a Theory of Change (ToC). A Theory of Change is a depiction of ‘program logic’ (Clark & Taplin, 2012) that illustrates how particular inputs are expected to lead to outputs and then to intermediate and final intended outcomes (Rogers, 2014).

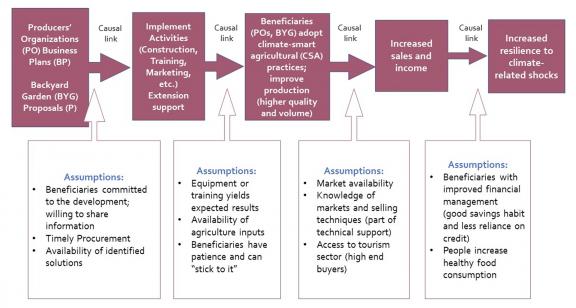

As an example, Figure 1 shows the sequence of project activities, outputs, outcomes, and impacts of a GCF project titled “Resilient Rural Belize”, which aims to increase the resilience of smallholder farmers in Belize to climate change impacts. Between the nodes of a ToC, assumptions state the conditions that must hold for one node to lead to the next as expected. Generating an indicator for each node and each assumption lets evaluators see where along the causal chain the project has worked as expected and where it has not. The ToC can be refined periodically based on evidence collected during implementation and from M&E activities (Clark & Taplin, 2012).

Figure 1. A flow chart for GCF’s FP101 – Resilient Rural Belize
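To make the indicator logic concrete, the sketch below represents a ToC as an ordered chain of nodes, each with an optional assumption, and reports the first point where an indicator falls short of its target. It is a minimal Python illustration, not a LORTA or GCF tool: the `Link` class, the node labels, and all values and targets are hypothetical placeholders loosely inspired by the Belize example, and it assumes that higher indicator values always mean progress.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Link:
    """One node of a hypothetical ToC chain, plus the assumption that must
    hold for the chain to progress to the next node (None for the last node)."""
    node: str
    node_value: float          # observed value of the node's indicator
    node_target: float         # value expected if the node was reached
    assumption: Optional[str] = None
    assumption_value: Optional[float] = None
    assumption_target: Optional[float] = None


def first_break(chain: List[Link]) -> Optional[str]:
    """Walk the chain in order and describe the first node or assumption whose
    indicator misses its target; return None if every link holds."""
    for link in chain:
        if link.node_value < link.node_target:
            return f"node not reached: {link.node}"
        if (link.assumption is not None
                and link.assumption_value is not None
                and link.assumption_target is not None
                and link.assumption_value < link.assumption_target):
            return f"assumption failed: {link.assumption}"
    return None


# Hypothetical shares (0 to 1) for illustration only; not project data.
chain = [
    Link("Backyard gardens established", 0.9, 0.8,
         "Households keep tending gardens", 0.7, 0.6),
    Link("Garden output increases", 0.5, 0.6,
         "Produce is eaten or sold", 0.8, 0.7),
    Link("Household resilience improves", 0.4, 0.5),
]

print(first_break(chain))  # → node not reached: Garden output increases
```

Checking links in causal order mirrors how a ToC is read: once an earlier node fails, later shortfalls are likely consequences rather than separate problems.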

2. Evaluation Questions and Indicators

Drawing on the Theory of Change, you then need to define a clear and answerable set of evaluation questions. The main question focuses on the causal impact of a project on the outcomes of interest (Gertler et al., 2016). In the Resilient Rural Belize project, for example, the overarching question is: “Do the business plan and backyard garden proposals increase households’ resilience to climate-related shocks?” This question can be broken down into subsidiary questions, such as “Do the backyard garden proposals lead to an increase in income?”, which shed light on different components of the project. At this stage, abstract concepts like resilience need to be clearly defined through relevant indicators, such as people’s savings, assets, healthy food consumption, and reduced reliance on credit. A robust ToC makes this process considerably easier, as it guides the evaluation approach (Clark & Taplin, 2012).
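Several indicators can be combined into a single resilience outcome in many ways. One common approach in the impact evaluation literature, shown here as an illustration rather than the method used for Resilient Rural Belize, is an equal-weighted index of standardized indicators. Each indicator is converted to a z-score using the comparison group's mean and standard deviation, indicators where lower values are better (such as reliance on credit) have their sign flipped, and the z-scores are averaged. The indicator names and all numbers below are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List

# Hypothetical indicator names. True means larger values signal more resilience.
HIGHER_IS_BETTER = {
    "savings": True,
    "assets": True,
    "healthy_food_days": True,
    "credit_reliance": False,  # reduced reliance on credit = more resilient
}


def resilience_index(households: List[Dict[str, float]],
                     comparison: List[Dict[str, float]]) -> List[float]:
    """Equal-weighted average of z-scores, standardized against the comparison
    group so that the comparison group's index averages zero."""
    index = []
    for hh in households:
        z_scores = []
        for name, higher_better in HIGHER_IS_BETTER.items():
            ref = [c[name] for c in comparison]
            z = (hh[name] - mean(ref)) / stdev(ref)
            z_scores.append(z if higher_better else -z)
        index.append(mean(z_scores))
    return index


# Placeholder data for illustration only.
comparison = [
    {"savings": 100, "assets": 5, "healthy_food_days": 3, "credit_reliance": 0.5},
    {"savings": 200, "assets": 7, "healthy_food_days": 4, "credit_reliance": 0.4},
    {"savings": 150, "assets": 6, "healthy_food_days": 5, "credit_reliance": 0.6},
]
treated = [{"savings": 250, "assets": 8, "healthy_food_days": 6, "credit_reliance": 0.3}]

print(round(resilience_index(treated, comparison)[0], 3))  # → 2.0
```

Standardizing against the comparison group means the index reads as "standard deviations above the comparison average", which keeps indicators measured in different units on a common scale.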

3. Experimental methods

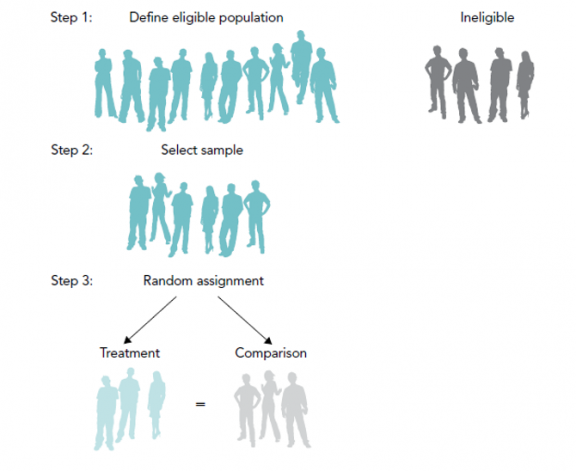

Next, determine whether an experimental design is appropriate for assessing your project. A randomized controlled trial (RCT), also called an experimental method, estimates project impacts by randomly assigning members of the target population to treated and non-treated groups. Figure 2 displays the process of a classical RCT.

Figure 2. Process of Classical Randomized Controlled Trials

Source: Hempel and Fiala (2012)

The Resilient Rural Belize project first identifies 4,000 households eligible for the backyard garden intervention. The eligibility criteria may include demographic characteristics such as income, occupation, and residence. Then, 1,000 households are randomly selected to build backyard gardens, while another 1,000 households receive the intervention at a later time and serve as the comparison group in the meantime. Because the intervention is randomly assigned, household characteristics should be balanced, on average, between the treatment and comparison groups. This limits confounding factors, such as elites or particular social groups self-selecting into the treatment group. Once the intervention is implemented, evaluators measure the difference in outcome indicators between the treatment and comparison groups. There are different approaches to random assignment, which can be classified into four types of RCT: a classical RCT, a phase-in RCT, a random encouragement design, and a clustered randomization design. The choice of an impact evaluation method depends on the operational characteristics of the program being evaluated (Gertler et al., 2016).
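The assignment and estimation steps described above can be sketched in Python as follows. This is a simplified illustration rather than the project's actual protocol: the household IDs are placeholders, the seeded `random.Random(seed).sample` call stands in for whatever documented randomization procedure an evaluation team would use, and the estimate is a plain difference in means with an unequal-variance standard error. In a phase-in design, this comparison is only valid until the later group starts receiving the intervention.

```python
import math
import random
from statistics import mean, variance
from typing import List, Sequence, Tuple


def assign_phase_in(eligible_ids: Sequence[str], n_now: int, n_later: int,
                    seed: int) -> Tuple[List[str], List[str]]:
    """Randomly draw n_now households to treat immediately and n_later
    households to treat later (the comparison group in the meantime).
    A fixed seed makes the draw reproducible and auditable."""
    rng = random.Random(seed)
    drawn = rng.sample(list(eligible_ids), n_now + n_later)
    return drawn[:n_now], drawn[n_now:]


def difference_in_means(treated: Sequence[float],
                        comparison: Sequence[float]) -> Tuple[float, float]:
    """Impact estimate = mean(treated) - mean(comparison), with the
    unequal-variance standard error sqrt(s_t^2 / n_t + s_c^2 / n_c)."""
    estimate = mean(treated) - mean(comparison)
    se = math.sqrt(variance(treated) / len(treated)
                   + variance(comparison) / len(comparison))
    return estimate, se


eligible = [f"HH{i:04d}" for i in range(4000)]  # placeholder household IDs
now, later = assign_phase_in(eligible, n_now=1000, n_later=1000, seed=2020)
print(len(now), len(later), len(set(now) & set(later)))  # → 1000 1000 0
```

After endline data are collected, `difference_in_means` would be applied to each outcome indicator, using the `now` households as the treatment group and the `later` households as the comparison group.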

4. Non-experimental methods

Randomization is not always desirable or practical for administrative, budgetary, or political reasons. In such cases, evaluators can use non-experimental methods. Unlike an experimental method, a non-experimental (or quasi-experimental) method usually constructs a comparison group without random assignment. Four quasi-experimental approaches are widely used, three of which rely on an explicitly constructed comparison group: difference-in-differences designs, propensity score matching, and regression discontinuity design. The fourth, instrumental variable regression, does not construct a comparison group in this way; instead, it relies on a source of variation (an instrument) that influences participation in the project but does not affect outcomes directly.
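As an illustration of the first of these methods, the basic difference-in-differences estimate compares the change over time in the treated group with the change over time in the comparison group. This nets out fixed differences between the groups, under the assumption that both would have followed parallel trends without the intervention. The sketch below computes it from four group means; the income figures are hypothetical. In practice the same quantity is usually estimated by regression with controls and appropriate standard errors.

```python
def diff_in_diff(treat_before: float, treat_after: float,
                 comp_before: float, comp_after: float) -> float:
    """DiD = (treated after - treated before) - (comparison after - comparison before).
    Only interpretable as an impact if the parallel-trends assumption holds."""
    return (treat_after - treat_before) - (comp_after - comp_before)


# Hypothetical mean monthly household incomes at baseline and endline.
print(diff_in_diff(treat_before=400, treat_after=520,
                   comp_before=380, comp_after=440))  # → 60
```

Here the treated group's income rose by 120 and the comparison group's by 60, so the estimated impact is the extra 60.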

5. Sample size and power calculation

If taking an experimental approach, researchers need to determine the sample size and statistical power they want for the analysis. Because collecting more data is costly, sample size is a compromise between budget constraints and evaluation quality. Power calculations help determine the sample size sufficient to detect statistically significant intervention effects (White & Raitzer, 2017). They rely on a minimum effect size: the smallest impact that the study aims to detect. If the study design has clusters, power calculations become more complex, but they can still be completed with a simple formula that inflates the required sample size to account for the correlation of outcomes within clusters. Power calculations need to be performed separately for each outcome variable.
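A common textbook version of this calculation, for two equal-sized arms and a continuous outcome under the normal approximation, is n = 2(z_a + z_b)^2 σ^2 / δ^2 per arm. Here z_a and z_b are standard normal quantiles for the two-sided significance level and the desired power, σ is the outcome's standard deviation, and δ is the minimum effect size. For clustered designs, n is multiplied by the design effect 1 + (m − 1)ρ, where m is the cluster size and ρ the intra-cluster correlation. The sketch below uses assumed values for δ, σ, m, and ρ; real calculations would draw on data from the target population, and dedicated software handles more complex designs.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(mde: float, sd: float, alpha: float = 0.05,
                        power: float = 0.80) -> float:
    """Units per arm for a two-sided test comparing two equal-sized groups:
    n = 2 * (z_{1 - alpha/2} + z_{power})^2 * sd^2 / mde^2."""
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return 2 * (z_alpha + z_power) ** 2 * sd ** 2 / mde ** 2


def design_effect(cluster_size: int, icc: float) -> float:
    """Inflation factor for cluster randomization: 1 + (m - 1) * rho."""
    return 1 + (cluster_size - 1) * icc


# Assumed inputs: an effect of 0.2 standard deviations, 20 households per
# cluster, and an intra-cluster correlation of 0.05.
n = sample_size_per_arm(mde=0.2, sd=1.0)
print(math.ceil(n))                                               # → 393
print(math.ceil(n * design_effect(cluster_size=20, icc=0.05)))   # → 766
```

Even a modest intra-cluster correlation nearly doubles the required sample here, which is why clustered designs need this adjustment before budgets are fixed.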

6. Timeline and budget

A clear timeline and budget should also be established. The project timeline typically includes three evaluation rounds: baseline, midline, and endline. For budget planning, Table 1 shows some of the key budget items for data collection.

Table 1. Budget items for data collection

| Budget category | Examples of budget items |
|---|---|
| Staff cost | Field coordinator, supervisor, enumerator, moderator (qualitative), translator, etc. |
| Training cost | Training venue, catering, training stipend for participants, accommodation |
| Transport | Car hire, fuel, driver, bus fare, motorcycle during training and data collection |
| Other | Tablets, incentives, printing of training material, communication/internet cost, venue for focus group discussions (qualitative) |

Source: 2020 LORTA Virtual Workshop presentation slides
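Because most data collection costs recur in every survey round, the number of rounds in the timeline drives the budget. The sketch below totals a data collection budget as recurring costs multiplied by the number of rounds, plus one-off purchases. The category split and every figure are hypothetical placeholders, not LORTA estimates. For example, it assumes that tablets are bought once and reused across baseline, midline, and endline.

```python
from typing import Dict

ROUNDS = ("baseline", "midline", "endline")


def data_collection_budget(per_round_costs: Dict[str, float],
                           one_off_costs: Dict[str, float],
                           rounds: int = len(ROUNDS)) -> float:
    """Total = (recurring costs incurred in every survey round) * rounds
    + one-off costs paid only once."""
    return rounds * sum(per_round_costs.values()) + sum(one_off_costs.values())


# Placeholder figures (USD) for illustration only.
per_round = {"staff": 30000, "training": 5000, "transport": 8000, "other": 4000}
one_off = {"tablets": 6000}

print(data_collection_budget(per_round, one_off))  # → 147000
```

Separating recurring from one-off items makes it easy to see the cost of adding or dropping a midline round.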

Conclusion

Researchers need to revisit the evaluation design continually as conditions change and findings emerge: impact evaluation is a process built on close communication with the field. The LORTA programme is founded on this principle and provides approved GCF projects with technical and capacity-building assistance on impact evaluation design and data analysis. I wish all evaluators and project implementers success in verifying whether, and to what extent, their projects are making the desired impact.

References

Clark, E., & Taplin, D. (2012). Theory of change technical papers: A series of papers to support development of theories of change based on practice in the field.

Gertler, P. J., Martinez, S., Premand, P., Rawlings, L. B., & Vermeersch, C. M. J. (2016). Impact Evaluation in Practice (2nd ed.). World Bank.

Hempel, K., & Fiala, N. (2012). Measuring Success of Youth Livelihood Interventions: A Practical Guide to Monitoring and Evaluation. World Bank, Washington, DC.

Richert, K., Parisi, D., Prowse, M., & Fiala, N. (2021). A Brief on the Potential Impact Evaluation Design for FP101 ‘Resilient Rural Belize’. Independent Evaluation Unit, Green Climate Fund, Songdo, South Korea.

Rogers, P. (2014). Theory of Change, Methodological Briefs: Impact Evaluation 2. UNICEF Office of Research, Florence.

White, H., & Raitzer, D. A. (2017). Impact evaluation of development interventions: A practical guide. Asian Development Bank.

https://www.theoryofchange.org/what-is-theory-of-change/toc-background/t...

Disclaimer: The views expressed in blogs are the author's own and do not necessarily reflect the views of the Independent Evaluation Unit of the Green Climate Fund.